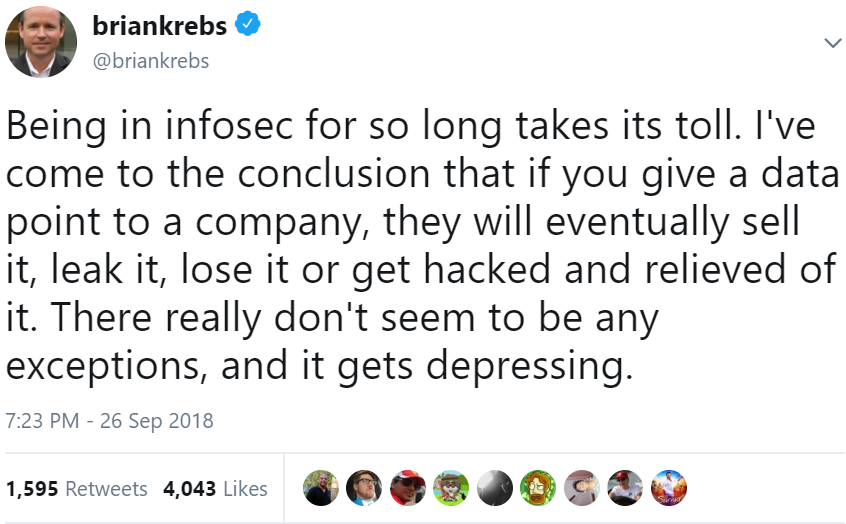

Earlier this month I spoke at a cybersecurity conference in Albany, N.Y. alongside Tony Sager, senior vice president and chief evangelist at the Center for Internet Security and a former bug hunter at the U.S. National Security Agency. We talked at length about many issues, including supply chain security, and I asked Sager whether he’d heard anything about rumors that Supermicro — a high tech firm in San Jose, Calif. — had allegedly inserted hardware backdoors in technology sold to a number of American companies.

Tony Sager, senior vice president and chief evangelist at the Center for Internet Security.

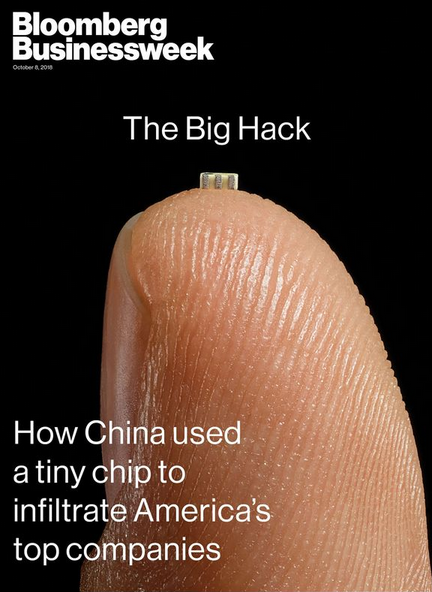

The event where Sager and I spoke took place before the publication of Bloomberg Businessweek’s controversial story alleging that Supermicro had duped almost 30 companies into buying backdoored hardware. Sager said he hadn’t heard anything about Supermicro specifically, but we chatted at length about the challenges of policing the technology supply chain.

Below are some excerpts from our conversation. I learned quite a bit, and I hope you will, too.

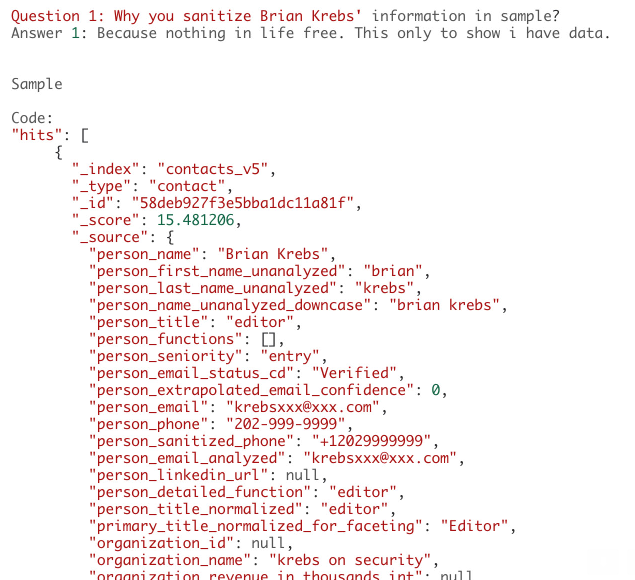

Brian Krebs (BK): Do you think Uncle Sam spends enough time focusing on the supply chain security problem? It seems like a pretty big threat, but also one that is really hard to counter.

Tony Sager (TS): The federal government has been worrying about this kind of problem for decades. In the 70s and 80s, the government was more dominant in the technology industry and didn’t have this massive internationalization of the technology supply chain.

But even then there were people who saw where this was all going, and there were some pretty big government programs to look into it.

BK: Right, the Trusted Foundry program I guess is a good example.

TS: Exactly. That was an attempt to help support a U.S.-based technology industry so that we had an indigenous place to work with, and where we have only cleared people and total control over the processes and parts.

BK: Why do you think more companies aren’t insisting on producing stuff through code and hardware foundries here in the U.S.?

TS: Like a lot of things in security, the economics always win. And eventually the cost differential for offshoring parts and labor overwhelmed attempts at managing that challenge.

BK: But certainly there are some areas of computer hardware and network design where you absolutely must have far greater integrity assurance?

TS: Right, and this is how they approach things at Sandia National Laboratories [one of three national nuclear security research and development laboratories]. One of the things they’ve looked at is this whole business of whether someone might sneak something into the design of a nuclear weapon.

The basic design principle has been to assume that one person in the process may have been subverted somehow, and the whole design philosophy is built around making sure that no one person gets to sign off on what goes into a particular process, and that there is never unobserved control over any one aspect of the system. So, there are a lot of technical and procedural controls there.

But the bottom line is that doing this is really much harder [for non-nuclear electronic components] because of all the offshoring now of electronic parts, as well as the software that runs on top of that hardware.
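To make the "no one person gets to sign off" principle concrete, here is a minimal sketch of a two-person approval rule. It is only an illustration: the `ChangeRequest` class, its field names, and the default of two reviewers are assumptions made for this example, not a description of how Sandia or any other lab actually implements its controls.

```python
from dataclasses import dataclass, field


@dataclass
class ChangeRequest:
    """A hypothetical design change awaiting sign-off (illustrative only)."""

    author: str
    description: str
    approvers: set[str] = field(default_factory=set)

    def approve(self, reviewer: str) -> None:
        # The author can never count as a reviewer of their own change.
        if reviewer == self.author:
            raise PermissionError("author cannot approve their own change")
        # A set ignores repeat approvals from the same reviewer.
        self.approvers.add(reviewer)

    def is_releasable(self, required: int = 2) -> bool:
        # Require `required` distinct reviewers, none of whom is the author.
        return len(self.approvers) >= required
```

With this rule, a single subverted insider, whether author or reviewer, cannot push a change through alone; the attacker has to compromise several people at once.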

BK: So is the government basically only interested in supply chain security so long as it affects stuff they want to buy and use?

TS: The government still has regular meetings on supply chain risk management, but there are no easy answers to this problem. The technical ability to detect something wrong has been outpaced by the ability to do something about it.

BK: Wait…what?

TS: Suppose a nation state dominates a piece of technology and in theory could plant something inside of it. The attacker in this case has a risk model, too. Yes, he could put something in the circuitry or design, but his risk of exposure also goes up.

Could I as an attacker control components that go into certain designs or products? Sure, but it’s often not very clear what the target is for that product, or how you will guarantee it gets used by your target. And there are still a limited set of bad guys who can pull that stuff off. In the past, it’s been much more lucrative for the attacker to attack the supply chain on the distribution side, to go after targeted machines in targeted markets to lessen the exposure of this activity.

BK: So targeting your attack becomes problematic if you’re not really limiting the scope of targets that get hit with compromised hardware.

TS: Yes, you can put something into everything, but all of a sudden you have this massive big data collection problem on the back end where you as the attacker have created a different kind of analysis problem. Of course, some nations have more capability than others to sift through huge amounts of data they’re collecting.

BK: Can you talk about some of the things the government has typically done to figure out whether a given technology supplier might be trying to slip in a few compromised devices among an order of many?

TS: There’s this concept of the “blind buy,” where if you think the threat vector is someone gets into my supply chain and subverts the security of individual machines or groups of machines, the government figures out a way to purchase specific systems so that no one can target them. In other words, the seller doesn’t know it’s the government who’s buying it. This is a pretty standard technique to get past this, but it’s an ongoing cat and mouse game to be sure.

BK: I know you said before this interview that you weren’t prepared to comment on the specific claims in the recent Bloomberg article, but it does seem that supply chain attacks targeting cloud providers could be very attractive for an attacker. Can you talk about how the big cloud providers could mitigate the threat of incorporating factory-compromised hardware into their operations?

TS: It’s certainly a natural place to attack, but it’s also a complicated place to attack — particularly the very nature of the cloud, which is many tenants on one machine. If you’re attacking a target with on-premise technology, that’s pretty simple. But the purpose of the cloud is to abstract machines and make more efficient use of the same resources, so that there could be many users on a given machine. So how do you target that in a supply chain attack?

BK: Is there anything about the way these cloud-based companies operate, maybe just sheer scale, that makes them perhaps uniquely more resilient to supply chain attacks vis-a-vis companies in other industries?

TS: That’s a great question. The counter positive trend is that in order to get the kind of speed and scale that the Googles and Amazons and Microsofts of the world want and need, these companies are far less inclined now to just take off-the-shelf hardware and they’re actually now more inclined to build their own.

BK: Can you give some examples?

TS: There’s a fair amount of discussion among these cloud providers about commonalities — what parts of design could they cooperate on so there’s a marketplace for all of them to draw upon. And so we’re starting to see a real shift from off-the-shelf components to things that the service provider is either designing or pretty closely involved in the design, and so they can also build in security controls for that hardware. Now, if you’re counting on people to exactly implement designs, you have a different problem. But these are really complex technologies, so it’s non-trivial to insert backdoors. It gets harder and harder to hide those kinds of things.

BK: That’s interesting, given how much each of us have tied up in various cloud platforms. Are there other examples of how the cloud providers can make it harder for attackers who might seek to subvert their services through supply chain shenanigans?

TS: One factor is they’re rolling this technology out fairly regularly, and on top of that the shelf life of technology for these cloud providers is now a very small number of years. They all want faster, more efficient, powerful hardware, and a dynamic environment is much harder to attack. This actually turns out to be a very expensive problem for the attacker because it might have taken them a year to get that foothold, but in a lot of cases the short shelf life of this technology [with the cloud providers] is really raising the costs for the attackers.

When I looked at what Amazon and Google and Microsoft are pushing for it’s really a lot of horsepower going into the architecture and designs that support that service model, including the building in of more and more security right up front. Yes, they’re still making lots of use of non-U.S. made parts, but they’re really aware of that when they do. That doesn’t mean these kinds of supply chain attacks are impossible to pull off, but by the same token they don’t get easier with time.

BK: It seems to me that the majority of the government’s efforts to help secure the tech supply chain come in the form of looking for counterfeit products that might somehow wind up in tanks and ships and planes and cause problems there — as opposed to using that microscope to look at commercial technology. Do you think that’s accurate?

TS: I think that’s a fair characterization. It’s a logistical issue. This problem of counterfeits is a related problem. Transparency is one general design philosophy. Another is accountability and traceability back to a source. There’s this buzzphrase that if you can’t build in security then build in accountability. Basically the notion there was you often can’t build in the best or perfect security, but if you can build in accountability and traceability, that’s a pretty powerful deterrent as well as a necessary aid.

BK: For example….?

TS: Well, there’s this emphasis on high quality and unchangeable logging. If you can build strong accountability that if something goes wrong I can trace it back to who caused that, I can trace it back far enough to make the problem more technically difficult for the attacker. Once I know I can trace back the construction of a computer board to a certain place, you’ve built a different kind of security challenge for the attacker. So the notion there is while you may not be able to prevent every attack, this causes the attacker different kinds of difficulties, which is good news for the defense.
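One common way to approximate "unchangeable" logging in software is a hash chain, where each entry commits to the one before it. The sketch below is a minimal illustration under that assumption; the function names and the event fields are hypothetical, and Sager did not describe any specific mechanism.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(prev_hash: str, event: dict) -> str:
    # Hash the previous entry's hash together with a canonical form of the event.
    payload = json.dumps(event, sort_keys=True).encode("utf-8")
    return hashlib.sha256(prev_hash.encode("ascii") + payload).hexdigest()


def append_entry(log: list[dict], event: dict) -> None:
    # Each new entry records the hash of the entry before it.
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({"event": event, "prev": prev_hash, "hash": _digest(prev_hash, event)})


def verify(log: list[dict]) -> bool:
    # Recompute every hash; any edited or reordered entry breaks the chain.
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash or entry["hash"] != _digest(prev_hash, entry["event"]):
            return False
        prev_hash = entry["hash"]
    return True
```

A chain like this is tamper-evident rather than tamper-proof: someone who can rewrite the entire log can also recompute every hash, so in practice the latest hash would need to be stored somewhere the attacker cannot reach.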

BK: So is supply chain security more of a physical security or cybersecurity problem?

TS: We like to think of this as we’re fighting in cyber all the time, but often that’s not true. If you can force attackers to subvert your supply chain, then you first off take away the mid-level criminal elements and you force the attackers to do things that are outside the cyber domain, such as set up front companies, bribe humans, etc. And in those domains, particularly the human dimension, we have other mechanisms that are detectors of activity there.

BK: What role does network monitoring play here? I’m hearing a lot right now from tech experts who say organizations should be able to detect supply chain compromises because at some point they should be able to see truckloads of data leaving their networks if they’re doing network monitoring right. What do you think about the role of effective network monitoring in fighting potential supply chain attacks?

TS: I’m not so optimistic about that. It’s too easy to hide. Monitoring is about finding anomalies, either in the volume or type of traffic you’d expect to see. It’s a hard problem category. For the US government, with perimeter monitoring there’s always a trade off in the ability to monitor traffic and the natural movement of the entire Internet towards encryption by default. So a lot of things we don’t get to touch because of tunneling and encryption, and the Department of Defense in particular has really struggled with this.

Now obviously what you can do is man-in-the-middle traffic with proxies and inspect everything there, and the perimeter of the network is ideally where you’d like to do that, but the speed and volume of the traffic is often just too great.
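As a rough illustration of what "finding anomalies in the volume of traffic" can mean, the sketch below flags outbound byte counts that sit far from a historical baseline. The function name, the three-standard-deviation threshold, and the sample numbers are all assumptions for this example; real monitoring products use far richer models.

```python
from statistics import mean, stdev


def flag_volume_anomalies(baseline, observed, threshold=3.0):
    """Return indexes of observed values far outside the baseline.

    `baseline` is a list of past per-interval outbound byte counts
    (at least two values); `observed` is the list of new counts to check.
    """
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        # A perfectly flat baseline: anything different is unusual.
        return [i for i, v in enumerate(observed) if v != mu]
    # Flag values more than `threshold` standard deviations from the mean.
    return [i for i, v in enumerate(observed) if abs(v - mu) / sigma > threshold]
```

The weakness Sager points to shows up immediately: an implant that leaks data slowly, staying within normal variation, never crosses the threshold, and encrypted tunnels hide what the bytes actually contain.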

BK: Isn’t the government already doing this with the “trusted internet connections” or Einstein program, where they consolidate all this traffic at the gateways and try to inspect what’s going in and out?

TS: Yes, so they’re creating a highest volume, highest speed problem. To monitor that and to not interrupt traffic you have to have bleeding edge technology to do that, and then handle a ton of it which is already encrypted. If you’re going to try to proxy that, break it out, do the inspection and then re-encrypt the data, a lot of times that’s hard to keep up with technically and speed-wise.

BK: Does that mean it’s a waste of time to do this monitoring at the perimeter?

TS: No. The initial foothold by the attacker could have easily been via a legitimate tunnel and someone took over an account inside the enterprise. The real meaning of a particular stream of packets coming through the perimeter you may not know until that thing gets through and executes. So you can’t solve every problem at the perimeter. Some things only become obvious, and only make sense to catch, when they open up at the desktop.

BK: Do you see any parallels between the challenges of securing the supply chain and the challenges of getting companies to secure Internet of Things (IoT) devices so that they don’t continue to become a national security threat for just about any critical infrastructure, such as with DDoS attacks like we’ve seen over the past few years?

TS: Absolutely, and again the economics of security are so compelling. With IoT we have the cheapest possible parts, devices with a relatively short life span and it’s interesting to hear people talking about regulation around IoT. But a lot of the discussion I’ve heard recently does not revolve around top-down solutions but more like how do we learn from places like the Food and Drug Administration about certification of medical devices. In other words, are there known characteristics that we would like to see these devices put through before they become in some generic sense safe.

BK: How much of addressing the IoT and supply chain problems is about being able to look at the code that powers the hardware and finding the vulnerabilities there? Where does accountability come in?

TS: I used to look at other people’s software for a living and find zero-day bugs. What I realized was that our ability to find things as human beings with limited technology was never going to solve the problem. The deterrent effect of people believing that someone was inspecting their software usually got more positive results than the actual looking. If they were going to make a mistake, deliberately or otherwise, they would have to work hard at it, and if there was some method of transparency, us finding the one or two and making a big deal of it when we did was often enough of a deterrent.

BK: Sounds like an approach that would work well to help us feel better about the security and code inside of these election machines that have become the subject of so much intense scrutiny of late.

TS: We’re definitely going through this now in thinking about the election devices. We’re kind of going through this classic argument where hackers are carrying the noble flag of truth and vendors are hunkering down on liability. So some of the vendors seem willing to do something different, but at the same time they’re kind of trapped now by the good intentions of the open vulnerability community.

The question is, how do we bring some level of transparency to the process, but probably short of vendors exposing their trade secrets and the code to the world? What is it that they can demonstrate in terms of cost effectiveness of development practices to scrub out some of the problems before they get out there? This is important, because elections need one outcome: Public confidence in the outcome. And of course, one way to do that is through greater transparency.

BK: What, if anything, are the takeaways for the average user here? With the proliferation of IoT devices in consumer homes, is there any hope that we’ll see more tools that help people gain more control over how these systems are behaving on the local network?

TS: Most of [the supply chain problem] is outside the individual’s ability to do anything about, and beyond the ability of small businesses to grapple with. It’s in fact outside of the autonomy of the average company to figure it out. We do need more national focus on the problem.

It’s now almost impossible for consumers to buy electronics that aren’t Internet-connected. The chipsets are so cheap and the ability for every device to have its own Wi-Fi chip built in means that [manufacturers] are adding them whether it makes sense to or not. I think we’ll see more security coming into the marketplace to manage devices. So for example you might define rules that say appliances can talk to the manufacturer only.

We’re going to see more easy-to-use tools available to consumers to help manage all these devices. We’re starting to see the fight for dominance in this space already at the home gateway and network management level. As these devices get more numerous and complicated, there will be more consumer oriented ways to manage them. Some of the broadband providers already offer services that will tell what devices are operating in your home and let users control when those various devices are allowed to talk to the Internet.
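A rule like "appliances can talk to the manufacturer only" boils down to a per-device allowlist with everything else denied. Here is a minimal sketch of that check; the device names and the `.example` hostnames are made up for illustration, and a real home gateway would enforce this in its firewall or DNS layer rather than in a Python function.

```python
# Hypothetical per-device allowlist: each appliance may reach only its vendor.
ALLOWED_DESTINATIONS = {
    "thermostat": {"api.thermo-vendor.example"},
    "fridge": {"telemetry.fridge-vendor.example"},
}


def is_allowed(device: str, destination_host: str) -> bool:
    """Default-deny check: unknown devices and unlisted hosts are blocked."""
    allowed = ALLOWED_DESTINATIONS.get(device, set())
    # Normalize case and any trailing dot before comparing hostnames.
    return destination_host.lower().rstrip(".") in allowed
```

For example, `is_allowed("fridge", "telemetry.fridge-vendor.example")` passes, while the same fridge trying to reach any other host, or a device the gateway has never seen, is refused.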

Since Bloomberg’s story broke, the U.S. Department of Homeland Security and the National Cyber Security Centre, a unit of Britain’s eavesdropping agency, GCHQ, both came out with statements saying they had no reason to doubt vehement denials by Amazon and Apple that they were affected by any incidents involving Supermicro’s supply chain security. Apple also penned a strongly-worded letter to lawmakers denying claims in the story.

Meanwhile, Bloomberg reporters published a follow-up story citing new, on-the-record evidence to back up claims made in their original story.

from

https://krebsonsecurity.com/2018/10/supply-chain-security-101-an-experts-view/


In the context of computer and Internet security, supply chain security refers to the challenge of validating that a given piece of electronics — and by extension the software that powers those computing parts — does not include any extraneous or fraudulent components beyond what was specified by the company that paid for the production of said item.
